Zoe Kleinman, Technology editor

BBC

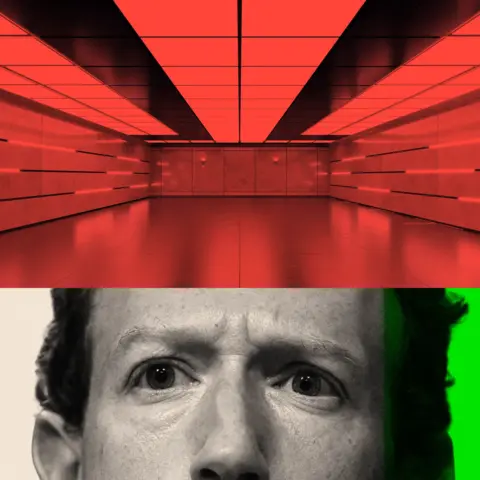

Mark Zuckerberg is said to have started work on Koolau Ranch, his sprawling 1,400-acre compound on the Hawaiian island of Kauai, as far back as 2014.

It is set to include a shelter, complete with its own energy and food supplies, though the carpenters and electricians working on the site were banned from talking about it by non-disclosure agreements, according to a report by Wired magazine. A six-foot wall blocked the project from view of a nearby road.

Asked last year if he was creating a doomsday bunker, the Facebook founder gave a flat "no". The underground space, spanning some 5,000 square feet, is, he explained, "just like a little shelter, it's like a basement".

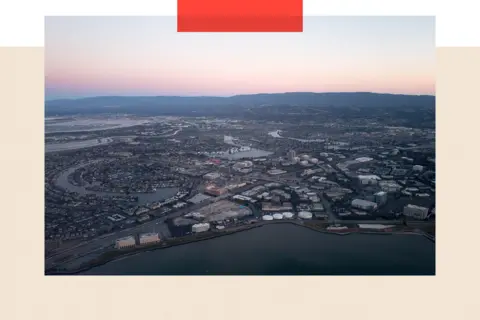

That hasn't stopped the speculation, nor has it quelled talk about his decision to buy 11 properties in the Crescent Park neighbourhood of Palo Alto in California, apparently adding a 7,000-square-foot underground space beneath them.

Though his building permits refer to basements, according to the New York Times, some of his neighbours call it a bunker. Or a billionaire's bat cave.

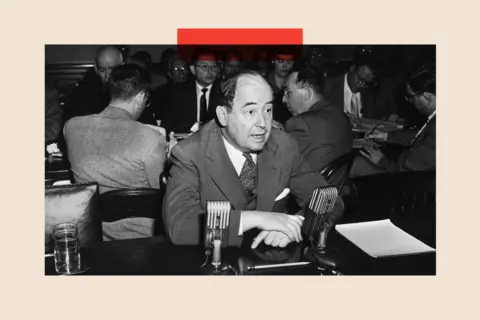

Bloomberg via Getty Images

Then there is the speculation around other Silicon Valley billionaires, some of whom appear to have been busy buying up chunks of land with underground spaces, ripe for conversion into multi-million pound luxury bunkers.

Reid Hoffman, the co-founder of LinkedIn, has talked about "apocalypse insurance". This is something about half of the super-wealthy have, he has previously claimed, with New Zealand a popular destination for homes.

So, could they really be preparing for war, the effects of climate change, or some other catastrophic event the rest of us have yet to know about?

Getty Images

In the last few years, the advancement of artificial intelligence (AI) has only added to that list of potential existential woes. Many are deeply worried at the sheer speed of the progression.

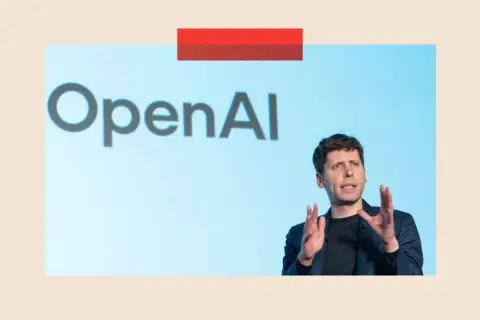

Ilya Sutskever, chief scientist and a co-founder of the technology company OpenAI, is reported to be one of them.

By mid-2023, the San Francisco-based firm had released ChatGPT - the chatbot now used by hundreds of millions of people across the world - and they were working fast on updates.

But by that summer, Mr Sutskever was becoming increasingly convinced that computer scientists were on the brink of developing artificial general intelligence (AGI) - the point at which machines match human intelligence - according to a book by journalist Karen Hao.

In a meeting, Mr Sutskever suggested to colleagues that they should dig an underground shelter for the company's top scientists before such a powerful technology was released on the world, Ms Hao reports.

"We're definitely going to build a bunker before we release AGI," he's widely reported to have said, though it's unclear who he meant by "we".

AFP via Getty Images

It sheds light on a strange fact: many leading computer scientists who are working hard to develop a hugely intelligent form of AI also seem deeply afraid of what it could one day do.

So when exactly - if ever - will AGI arrive? And could it really prove transformational enough to make ordinary people afraid?

An arrival 'sooner than we think'

Tech billionaires have claimed that AGI is imminent. OpenAI boss Sam Altman said in December 2024 that it will come "sooner than most people in the world think".

Sir Demis Hassabis, the co-founder of DeepMind, has predicted it will arrive within the next five to ten years, while Anthropic founder Dario Amodei wrote last year that what he prefers to call "powerful AI" could be with us as early as 2026.

Others are dubious. "They move the goalposts all the time," says Dame Wendy Hall, professor of computer science at Southampton University. "It depends who you talk to." We are on the phone but I can almost hear the eye-roll.

"The scientific community says AI technology is amazing," she adds, "but it's nowhere near human intelligence."

There would need to be a number of "fundamental breakthroughs" first, agrees Babak Hodjat, chief technology officer of the tech firm Cognizant.

What's more, it's unlikely to arrive as a single moment. Rather, AI is a rapidly advancing technology on a journey, and there are many companies around the world racing to develop their own versions of it.

But one reason the idea excites some in Silicon Valley is that it's thought to be a precursor to something even more advanced: ASI, or artificial superintelligence, meaning tech that surpasses human intelligence.

It was back in 1958 that the concept of "the singularity" was attributed posthumously to Hungarian-born mathematician John von Neumann. It refers to the moment when computer intelligence advances beyond human understanding.

Getty Images

More recently, the 2024 book Genesis, written by Eric Schmidt, Craig Mundie and the late Henry Kissinger, explores the idea of a super-powerful technology that becomes so efficient at decision-making and leadership that we end up handing control to it completely.

It's a matter of when, not if, they argue.

Money for all, without needing a job?

Those in favour of AGI and ASI are almost evangelical about its benefits. It will find new cures for deadly diseases, solve climate change and invent an inexhaustible supply of clean energy, they argue.

Elon Musk has even claimed that super-intelligent AI could usher in an era of "universal high income".

He recently endorsed the idea that AI will become so cheap and widespread that virtually anyone will want their "own personal R2-D2 and C-3PO" (referencing the droids from Star Wars).

"Everyone will have the best medical care, food, home, transport and everything else. Sustainable abundance," he enthused.

AFP via Getty Images

There is a scary side, of course. Could the tech be hijacked by terrorists and used as an enormous weapon? Or what if it decides for itself that humanity is the cause of the world's problems, and destroys us?

"If it's smarter than you, then we have to keep it contained," warned Sir Tim Berners-Lee, creator of the World Wide Web, talking to the BBC earlier this month.

"We have to be able to switch it off."

Getty Images

Governments are taking some protective steps. In the US, where many leading AI companies are based, President Biden signed an executive order in 2023 that required some firms to share safety test results with the federal government, though President Trump has since revoked some of the order, calling it a "barrier" to innovation.

Meanwhile in the UK, the AI Safety Institute, a government-funded research body, was set up two years ago to better understand the risks posed by advanced AI.

And then there are those super-rich with their own apocalypse insurance plans.

"Saying you're 'buying a house in New Zealand' is kind of a wink, wink, say no more," Reid Hoffman previously said. The same presumably goes for bunkers.

But there's a distinctly human flaw.

I once met a former bodyguard of one billionaire with his own "bunker", who told me his security team's first priority, if this really did happen, would be to eliminate said boss and get in the bunker themselves. And he didn't seem to be joking.

Is it all alarmist nonsense?

Neil Lawrence is a professor of machine learning at Cambridge University. To him, this whole debate in itself is nonsense.

"The notion of Artificial General Intelligence is as absurd as the notion of an 'Artificial General Vehicle'," he argues.

"The right vehicle is dependent on the context. I used an Airbus A350 to fly to Kenya, I use a car to get to the university each day, I walk to the cafeteria… There's no vehicle that could ever do all of this."

For him, talk about AGI is a distraction.

Smith Collection/Gado/Getty Images

"The technology we have [already] built allows, for the first time, normal people to directly talk to a machine and potentially have it do what they intend. That is absolutely extraordinary… and utterly transformational.

"The big worry is that we're so drawn into big tech's narratives about AGI that we're missing the ways in which we need to make things better for people."

Current AI tools are trained on mountains of data and are good at spotting patterns: whether tumour signs in scans or the word most likely to come after another in a particular sequence. But they do not "feel", however convincing their responses may appear.

"There are some 'cheaty' ways to make a Large Language Model (the foundation of AI chatbots) act as if it has memory and learns, but these are unsatisfying and quite inferior to humans," says Mr Hodjat.

Vince Lynch, CEO of the California-based IV.AI, is also wary of overblown declarations about AGI.

"It's great marketing," he says. "If you are the company that's building the smartest thing that's ever existed, people are going to want to give you money."

He adds, "It's not a two-years-away thing. It requires so much compute, so much human creativity, so much trial and error."

When I ask whether he believes AGI will ever materialise, there's a long pause.

"I really don't know."

Intelligence without consciousness

In some ways, AI has already taken the edge over human brains. A generative AI tool can be an expert in medieval history one minute and solve complex mathematical equations the next.

Some tech companies say they don't always know why their products respond the way they do. Meta says there are some signs of its AI systems improving themselves.

Getty Images News

Ultimately, though, no matter how intelligent machines become, biologically the human brain still wins.

It has about 86 billion neurons and 600 trillion synapses, many more than the artificial equivalents. The brain doesn't need to pause between interactions, and it is constantly adapting to new information.

"If you tell a human that life has been found on an exoplanet, they will immediately learn that, and it will affect their world view going forward. For an LLM [Large Language Model], they will only know that as long as you keep repeating this to them as a fact," says Mr Hodjat.

"LLMs also do not have meta-cognition, which means they don't quite know what they know. Humans seem to have an introspective capacity, sometimes referred to as consciousness, that allows them to know what they know."

It is a fundamental part of human intelligence - and one that is yet to be replicated in a lab.

Top picture credits: The Washington Post via Getty Images/Getty Images. Lead image shows Mark Zuckerberg (below) and a stock image of an unidentified bunker in an unknown location (above).